After installing the Kinect v2 SDK from here http://www.microsoft.com/en-us/download/details.aspx?id=44561, you can also download the supporting Unity 3D plugins from here http://go.microsoft.com/fwlink/?LinkID=513177. Note that the plugins require Unity 3D Pro. They expose APIs for the Kinect for Windows core functionality, Visual Gesture Builder and Face to Unity apps. The zip file containing the Unity packages also contains two sample scenes, Green Screen and Kinect View. Let's take a look at KinectView first:

So, I stumbled around a bit on the next step. Initially I tried opening the KinectView scene from its existing location, and this doesn't seem to work very well: when I examined the GameObjects, there was an error on each of the scripts. After some head-scratching I watched the video here http://channel9.msdn.com/Series/Programming-Kinect-for-Windows-v2/04, and at about 10:54 the presenter shows copying the scene locally into the current project. That fixed the issue for me, so I was back up and running. You can plug in your Kinect sensor, run the game, and then explore the scripts to see how the data is retrieved from the sensor and applied to GameObjects in your scene.


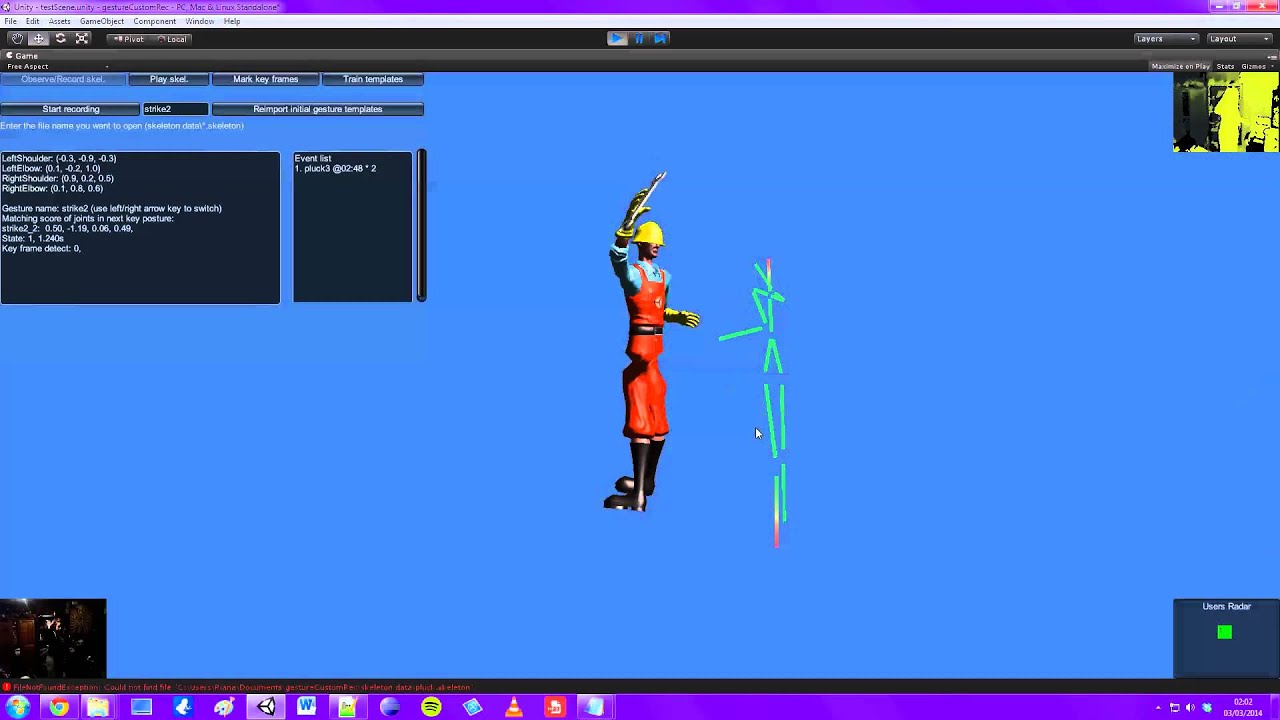

My idea was to create a very simple Kinect sample that moves particle systems around, following the positions of the user's hands. So let's step through what I did.

First, I chose File > New Project to bring up the Unity project wizard:

I added the Visual Studio Tools as I’m more comfortable editing and debugging in Visual Studio. You can download the tools from here https://visualstudiogallery.msdn.microsoft.com/20b80b8c-659b-45ef-96c1-437828fe7cf2.

Once the project was created, I imported the Kinect Unity package via Assets > Import Package > Custom Package…, navigated to where I had installed the Kinect package and chose Kinect.2.0.1410.19000.unitypackage.

I left everything selected and imported the various libraries and scripts. The editor shows that I have an empty scene just containing a Camera.

The first thing to do was to get the Kinect sensor data into the scene. To do this, I created a new C# script in the project and copied the code from the KinectView sample's BodySourceManager.cs file. The code retrieves the sensor, opens a reader on it for the body data, and reads the current frame's data in the script's Update() method. Update() is called as part of the game loop, so we are 'pulling' the Kinect skeleton data each time it is called. The class also has a GetData() method so that other scripts can access the data.
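The pull model described above can be sketched as follows. This is a minimal version modelled on the sample's BodySourceManager.cs, assuming the `Windows.Kinect` namespace from the Kinect Unity package; compare it with the copy shipped in the package rather than treating it as the exact sample code. The reader is opened once in Start(), and the latest frame is acquired on every Update() call.

```csharp
using UnityEngine;
using Windows.Kinect; // namespace used by the Kinect v2 Unity package

// Sketch of a body-source manager modelled on the sample's BodySourceManager.cs.
public class BodySourceManager : MonoBehaviour
{
    private KinectSensor _sensor;
    private BodyFrameReader _reader;
    private Body[] _data = null;

    // Lets other scripts read the most recent body data (null until the first frame arrives).
    public Body[] GetData()
    {
        return _data;
    }

    void Start()
    {
        // Get the default sensor and open a reader on its body frame source.
        _sensor = KinectSensor.GetDefault();
        if (_sensor != null)
        {
            _reader = _sensor.BodyFrameSource.OpenReader();
            if (!_sensor.IsOpen)
            {
                _sensor.Open();
            }
        }
    }

    void Update()
    {
        // Called once per frame by the game loop: pull the latest body frame, if any.
        if (_reader == null)
        {
            return;
        }

        var frame = _reader.AcquireLatestFrame();
        if (frame != null)
        {
            if (_data == null)
            {
                _data = new Body[_sensor.BodyFrameSource.BodyCount];
            }

            // Fills (or refreshes) the Body objects in _data from this frame.
            frame.GetAndRefreshBodyData(_data);

            // Release the frame promptly so the reader can acquire new ones.
            frame.Dispose();
        }
    }

    void OnApplicationQuit()
    {
        // Dispose of the reader and close the sensor when the app exits.
        if (_reader != null)
        {
            _reader.Dispose();
            _reader = null;
        }

        if (_sensor != null)
        {
            if (_sensor.IsOpen)
            {
                _sensor.Close();
            }
            _sensor = null;
        }
    }
}
```

Because the manager only caches the latest Body[] array, any number of other scripts can call GetData() in their own Update() without opening extra readers.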

I created an empty GameObject called BodySourceManager and associated the BodySourceManager.cs script with it so the code to set up the Kinect would be executed. Next, I created another empty GameObject and associated a new script which would get the body data and position the GameObject depending on the position of one of the joints in the skeleton. I made the joint type a variable so I could set up the positions of various GameObjects via the Unity editor. The intention was that if I then add a particle system as a child of this new GameObject then it would follow the tracked position of say, the left hand and I could add others for each joint that I wanted to track.

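The code for the Update loop, showing how the GameObject's position is set from the joint:

```csharp
using UnityEngine;
using Windows.Kinect; // Body, JointType

// Class name is illustrative; the post does not name this script.
public class JointFollower : MonoBehaviour
{
    // GameObject that carries the BodySourceManager script (assign in the Inspector).
    public GameObject _bodySourceManager;

    // Joint to follow, e.g. HandLeft or HandRight (set per GameObject in the Inspector).
    public JointType _jointType;

    private BodySourceManager _bodyManager;

    void Update ()
    {
        if (_bodySourceManager == null)
        {
            return;
        }

        _bodyManager = _bodySourceManager.GetComponent<BodySourceManager>();
        if (_bodyManager == null)
        {
            return;
        }

        Body[] data = _bodyManager.GetData();
        if (data == null)
        {
            return;
        }

        // get the first tracked body…
        foreach (var body in data)
        {
            if (body == null)
            {
                continue;
            }

            if (body.IsTracked)
            {
                // Joint positions are camera-space coordinates, in metres.
                var pos = body.Joints[_jointType].Position;
                this.gameObject.transform.position = new Vector3(pos.X, pos.Y, pos.Z);
                break;
            }
        }
    }
}
```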

The next step was to add two new GameObjects, one for each hand, set their joint types to HandLeft and HandRight, and then add a particle system to each as a child. I then tweaked the particle systems' parameters until they looked how I wanted. It seems to me that the combination of Unity and Kinect is a pretty powerful one, and I'm looking forward to seeing the results in the Windows Store. You can find the sample project here: http://1drv.ms/1tyKlIT.

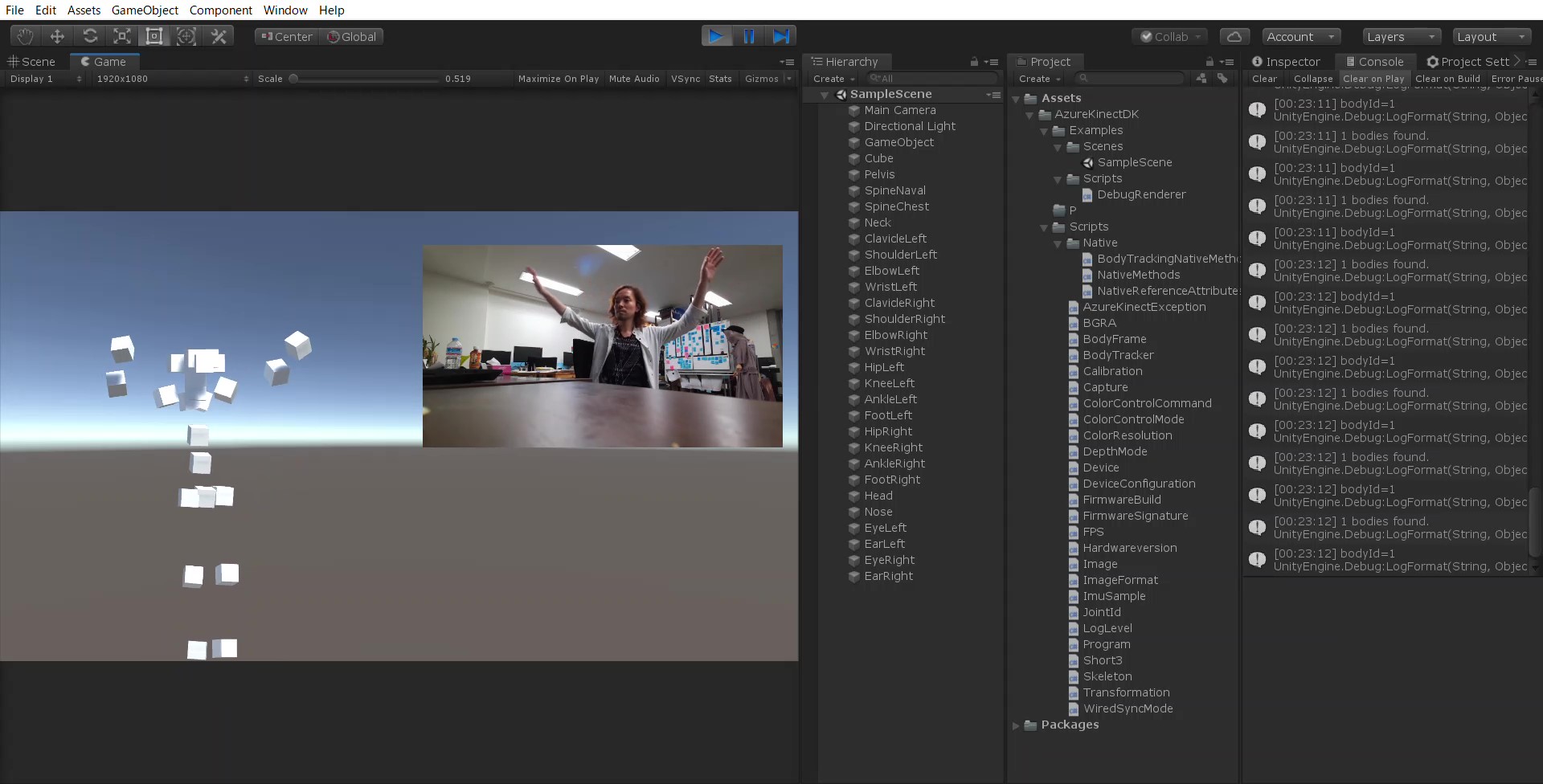

Azure Kinect Examples for Unity, v1.16 is a set of Azure Kinect (aka ‘Kinect for Azure’, K4A) examples that use several major scripts, grouped in one folder. The package currently contains over thirty-five demo scenes. Apart from the Azure Kinect sensor (aka K4A), the K4A-package supports the “classic” Kinect-v2 (aka Kinect for Xbox One) sensor, as well as Intel RealSense D400-series sensors.

The avatar-demo scenes show how to utilize Kinect-controlled avatars in your scenes, gesture demo – how to use discrete and continuous gestures in your projects, fitting room demos – how to overlay or blend the user’s body with virtual models, background removal demo – how to display user silhouettes on virtual background, point cloud demos – how to show the environment or users as meshes in your scene, etc. Short descriptions of all demo-scenes are available in the online documentation.

This package works with Azure Kinect (aka Kinect for Azure, K4A), Kinect-v2 (aka Kinect for Xbox One) and Intel RealSense D400-series sensors. It can be used with all versions of Unity – Free, Plus & Pro.

How to run the demo scenes:

1. (Azure Kinect) Download and install the latest release of the Azure-Kinect Sensor SDK. The download link is below. Then open ‘Azure Kinect Viewer’ to check whether the sensor works as expected.

2. (Azure Kinect) Follow the instructions on how to download and install the latest release of the Azure-Kinect Body Tracking SDK and its related components. The link is below. Then open ‘Azure Kinect Body Tracking Viewer’ to check whether the body tracker works as expected.

3. (Kinect-v2) Download and install Kinect for Windows SDK 2.0. The download link is below.

4. (RealSense) Download and install RealSense SDK 2.0. The download link is below.

5. Import this package into a new Unity project.

6. Open ‘File / Build settings’ and switch to ‘PC, Mac & Linux Standalone’, Target platform: ‘Windows’ & Architecture: ‘x86_64’.

7. Make sure that ‘Direct3D11’ is the first option in the ‘Auto Graphics API for Windows’-list setting, in ‘Player Settings / Other Settings / Rendering’.

8. Open and run a demo scene of your choice from a subfolder of the ‘AzureKinectExamples/KinectDemos’-folder. Short descriptions of all demo-scenes are available in the online documentation.

* The latest Azure Kinect Sensor SDK (v1.4.1) can be found here.

* The latest Azure Kinect Body Tracking SDK (v1.1.0) can be found here.

* Older releases of Azure Kinect Body Tracking SDK can be found here.

* Instructions how to install the body tracking SDK can be found here.

* Kinect for Windows SDK 2.0 can be found here.

* RealSense SDK 2.0 can be found here.
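Steps 6 and 7 can also be applied from a small editor script. The sketch below is a convenience under stated assumptions, not part of the K4A-asset: the class name and menu path are hypothetical, and it relies on the standard UnityEditor APIs `EditorUserBuildSettings.SwitchActiveBuildTarget`, `PlayerSettings.SetUseDefaultGraphicsAPIs` and `PlayerSettings.SetGraphicsAPIs`. It must live in a folder named `Editor` so that Unity compiles it into the editor assembly.

```csharp
using System.Linq;
using UnityEditor;
using UnityEngine.Rendering;

// Hypothetical helper (not part of the K4A-asset) applying steps 6 and 7.
public static class KinectDemoBuildSetup
{
    [MenuItem("Tools/Apply Kinect demo build settings")]
    public static void Apply()
    {
        // Step 6: Standalone platform, Windows, x86_64 architecture.
        const BuildTarget target = BuildTarget.StandaloneWindows64;
        EditorUserBuildSettings.SwitchActiveBuildTarget(BuildTargetGroup.Standalone, target);

        // Step 7: use an explicit graphics API list with Direct3D11 first,
        // keeping any other APIs that were already in the list after it.
        var others = PlayerSettings.GetGraphicsAPIs(target)
            .Where(api => api != GraphicsDeviceType.Direct3D11);
        PlayerSettings.SetUseDefaultGraphicsAPIs(target, false);
        PlayerSettings.SetGraphicsAPIs(
            target,
            new[] { GraphicsDeviceType.Direct3D11 }.Concat(others).ToArray());
    }
}
```

Run it once from Tools > Apply Kinect demo build settings, then confirm the result in ‘File / Build settings’ and ‘Player Settings’. Switching the build target can trigger a reimport of the project's assets, so the first run may take a while.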

Downloads:

* The K4A-asset may be purchased and downloaded in the Unity Asset store. All updates are and will be available to all customers, free of any charge.

* If you’d like to try the free version of the K4A-asset, you can find it here.

* If you’d like to utilize the Intel RealSense sensor with Cubemos body tracking, please look at this tip.

* If you’d like to utilize the LiDAR sensor on your iPhone-Pro or iPad-Pro as a depth sensor, please look at this tip.

Free for education:

The package is free for academic use. If you are a student, lecturer or university researcher, please e-mail me to get a free copy of the K4A-asset directly from me.


One request:

Please don’t share this package or its demo scenes in source form with others, or as part of public repositories, without my explicit consent.


Documentation:

* The basic documentation is in the Readme-pdf file, in the package.

* The K4A-asset online documentation is available here and as pdf.

* Many K4A-package tips, tricks and examples are available here.

Troubleshooting:

* If you can’t upgrade the K4A-package in your project to the latest release, please go to ‘C:/Users/{user-name}/AppData/Roaming/Unity/Asset Store-5.x’ on Windows or ‘/Users/{user-name}/Library/Unity/Asset Store-5.x’ on Mac, find and delete the currently downloaded package, and then try again to download and import it.

* If Unity editor freezes or crashes at the scene start, please make sure the path where the Unity project resides does not contain any non-English characters.

* If you get syntax errors in the console like “The type or namespace name ‘UI’ does not exist…”, please open the Package manager (menu Window / Package Manager) and install the ‘Unity UI’ package. The UI elements are extensively used in the K4A-asset demo scenes.

* If you get “‘KinectInterop.DepthSensorPlatform’ does not contain a definition for ‘DummyK2’” in the console, please delete ‘DummyK2Interface.cs’ from the KinectScripts/Interfaces-folder. This dummy interface has now been replaced with DummyK4AInterface.cs.

* If the Azure Kinect sensor cannot be started because the StartCameras()-method fails, please check #6 in the ‘How to run the demo scenes’-section above again.

* If you get a ‘Can’t create the body tracker’-error message, please check #2 in the ‘How to run the demo scenes’-section above again. Also check whether the Body Tracking SDK is installed in the ‘C:\Program Files\Azure Kinect Body Tracking SDK’-folder.

* If the body tracking stops working at run-time or the Unity editor crashes without notice, update to the latest version of the Body tracking SDK. This is a known bug in BT SDK v0.9.0.

* Regarding RealSense: If you’d like to try its integration with Cubemos body tracking SDK, please look at this tip.

* If there are errors like ‘Shader error in [System 1]…’, while importing the K4A-asset, please note this is not really an error, but shader issues due to missing HDRP & VFX packages. You only need these packages for the Point-cloud demo. All other scenes should be started without any issues.

* If there are compilation errors in the console, or the demo scenes remain in ‘Waiting for users’-state, make sure you have installed the respective sensor SDKs and the other needed components. Please also check, if the sensor is connected.
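Two of the troubleshooting checks above, non-English characters in the project path and the Body Tracking SDK install folder, can be automated. The following is a minimal sketch in plain C#, assuming that "non-English characters" can be approximated as anything outside printable ASCII and that the SDK sits in its default folder; the class and method names are hypothetical, not part of the K4A-asset.

```csharp
using System;
using System.IO;
using System.Linq;

// Hypothetical helper (not part of the K4A-asset) for two troubleshooting checks.
public static class KinectSetupChecks
{
    // Default install folder quoted in the troubleshooting tips.
    public const string DefaultBodyTrackingSdkFolder =
        @"C:\Program Files\Azure Kinect Body Tracking SDK";

    // Assumption: "non-English characters" is approximated as any character
    // outside the printable ASCII range (0x20-0x7E).
    public static bool PathHasNonAsciiCharacters(string path)
    {
        if (path == null) throw new ArgumentNullException(nameof(path));
        return path.Any(c => c < 0x20 || c > 0x7E);
    }

    // True if the folder exists; this checks only the folder, not its contents.
    public static bool IsBodyTrackingSdkFolderPresent(string folder = DefaultBodyTrackingSdkFolder)
    {
        return Directory.Exists(folder);
    }
}
```

Inside Unity you could pass `Application.dataPath` (the path of the project's Assets folder) to `PathHasNonAsciiCharacters`. The SDK folder is a parameter because a custom install location would make the default check report a false negative.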

What’s New in Version 1.16:

1. Added support for Azure Kinect Body Tracking SDK v1.1.0 (big thanks to 葛西浩!).

2. Added optional bone colliders to the AvatarController-component.

3. Updated BackgroundUserBodyImage- & BackgroundColorCamUserImage-components to support individual user indexes (thanks to Tepat Huleux).

4. Fixed SceneMeshDemo and BackgroundRemovalDemo2-scenes to limit the space in camera coordinates (thanks to Tomas Durkin).

5. Fixed the dst/cst transfer issue in case of KinectNetServer running with ARKit sensor interface.

Videos worth more than 1000 words:

Here is a holographic setup, created by i-mmersive GmbH, with Unity 2019.1f2, Azure Kinect sensor and “Azure Kinect Examples for Unity”: